Fine-tune and deploy open source models

Fine-tune and deploy Stable Diffusion, LLaMA v2, Falcon, and more – all from an easy-to-use API.

Everything you need to make models your own.

Dataset API

Programmatically upload, manage and validate datasets for tuning your models.
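As a rough illustration of what "validating" a dataset can involve, here is a minimal local check you might run before uploading. It assumes a JSONL file where every record has non-empty string `prompt` and `completion` fields. That schema and the `validate_jsonl` helper are hypothetical, chosen for the sketch; they are not Blueprint's documented dataset format or API.

```python
import json

# Hypothetical schema for illustration only (not Blueprint's documented format):
# every JSONL record must carry non-empty string "prompt" and "completion" fields.
REQUIRED_FIELDS = ("prompt", "completion")


def validate_jsonl(lines):
    """Return a list of (line_number, error) tuples; an empty list means valid."""
    errors = []
    for lineno, raw in enumerate(lines, start=1):
        raw = raw.strip()
        if not raw:
            continue  # blank lines are ignored
        try:
            record = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append((lineno, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            errors.append((lineno, "record is not a JSON object"))
            continue
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            if not isinstance(value, str) or not value.strip():
                errors.append((lineno, f"missing or empty '{field}'"))
    return errors


sample = [
    '{"prompt": "Recipe for pad thai", "completion": "Soak rice noodles..."}',
    '{"prompt": "Recipe for gazpacho"}',
    "not json",
]
for lineno, error in validate_jsonl(sample):
    print(f"line {lineno}: {error}")
```

Catching malformed records locally means a bad line is reported with its line number before any GPU time is spent on a training run.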

Fine-tuning API

Fine-tune open source models with ready-to-use training scripts. Just bring your data.

Deployment API

Deploy fine-tuned models in one click onto serverless GPUs, no infra required.

Serverless functions

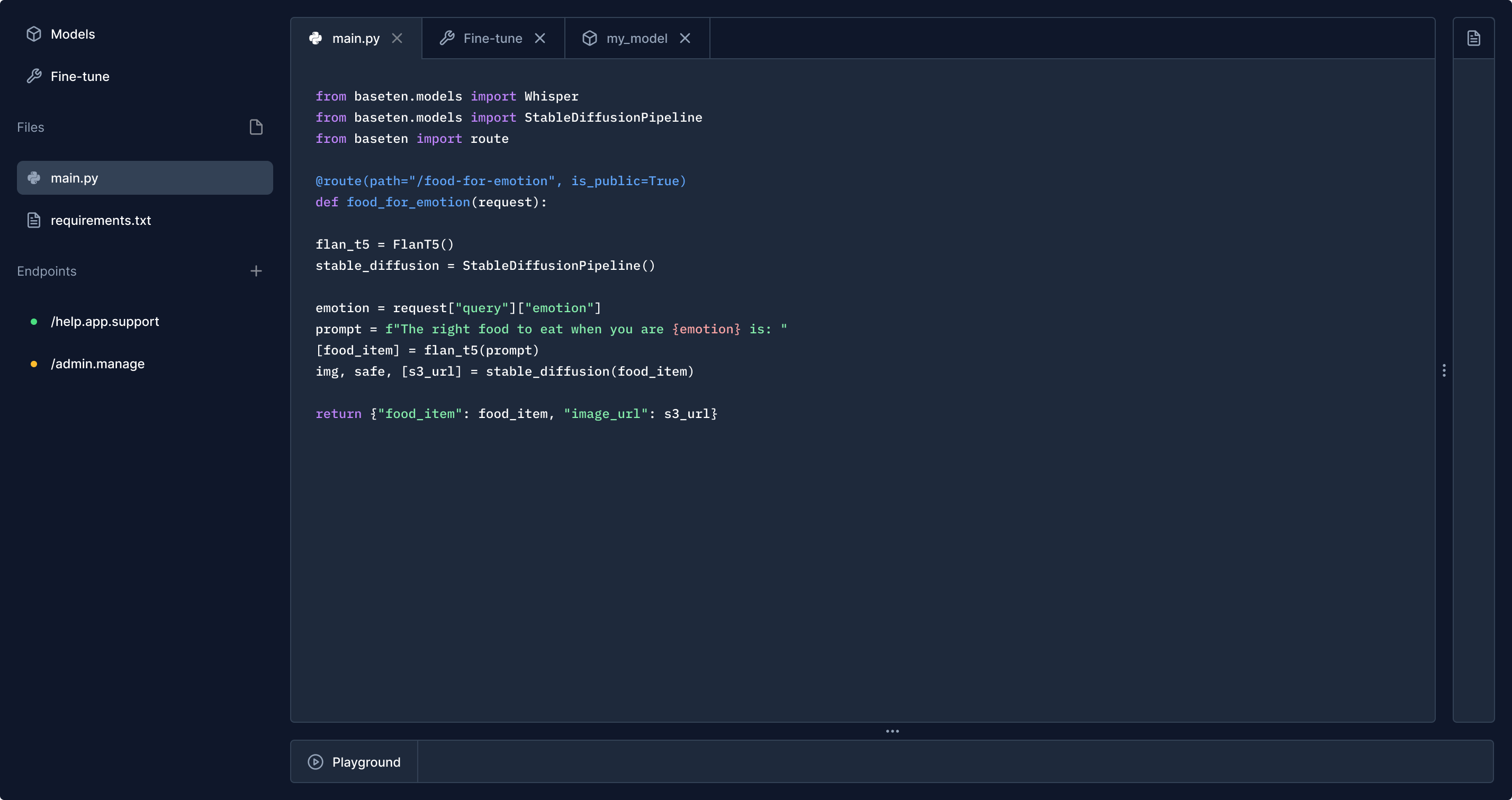

Write serverless Python functions and integrate your model with any of your applications.
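One common way to integrate a model with an application is to wrap it in a small function that accepts a JSON-like request, validates it, and returns a JSON-like response. The sketch below assumes that shape. `generate_handler`, the `prompt`/`output`/`error` fields, and the `echo_model` stand-in are all hypothetical names for illustration; this is not Baseten's serverless function API.

```python
from typing import Callable, Dict


# Hypothetical handler: takes a JSON-like dict, returns a JSON-like dict.
# The field names are illustrative assumptions, not a documented Baseten schema.
def generate_handler(request: Dict, model: Callable[[str], str]) -> Dict:
    prompt = request.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return {"error": "request must include a non-empty 'prompt' string"}
    # Delegate to whatever model callable is supplied (deployed or local).
    return {"output": model(prompt.strip())}


# Stand-in for a deployed model so the sketch runs without any service.
def echo_model(prompt: str) -> str:
    return f"recipe for: {prompt}"


print(generate_handler({"prompt": "  shakshuka "}, echo_model))
print(generate_handler({}, echo_model))
```

Passing the model in as a callable keeps the request handling testable on its own; swapping `echo_model` for a real model client would not change the handler.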

Fine-tune any model

Just bring your data
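The Stable Diffusion training config that follows pairs a cosine learning-rate schedule with a warmup percentage of 10. A minimal sketch of one common way such settings combine is shown here, assuming `warmup_percentage` is a percent of total training steps, the warmup is linear, and the cosine phase decays to zero. These are assumptions about typical schedulers, not documented Blueprint behaviour, and `learning_rate` is a hypothetical helper.

```python
import math


def learning_rate(step, total_steps, base_lr=1e-6, warmup_percentage=10):
    """Linear warmup, then cosine decay to zero (an assumed schedule shape).

    Assumes warmup_percentage is a percent of total_steps; this mirrors common
    "cosine with warmup" schedulers rather than any documented Blueprint code.
    """
    warmup_steps = int(total_steps * warmup_percentage / 100)
    if warmup_steps > 0 and step < warmup_steps:
        # Ramp linearly so the last warmup step reaches base_lr.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the post-warmup phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * min(1.0, progress)))


for step in (0, 50, 99, 100, 550, 1000):
    print(step, f"{learning_rate(step, total_steps=1000):.2e}")
```

With 1,000 total steps, the first 100 steps ramp up to `1e-6`, the rate falls to half that at the midpoint of the decay phase, and it reaches zero at the final step.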

Stable Diffusion training config

{
  "batch_size": 4,
  "learning_rate": 1e-6,
  "learning_rate_schedule": "cosine",
  "warmup_percentage": 10,
  "requires_grad": ["unet", "vae"]
}

Deploy and scale your models

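from baseten import StableDiffusionPipeline

# Invoke your fine-tuned model by its model ID
model = StableDiffusionPipeline(model_id="rwnod2q")

# The call returns the generated image and its URL
image, url = model("a photo of sks dog playing in the snow")

# Save the generated image locally
image.save("dog-snow.png")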

Ship your models

Building with Blueprint

I fine-tuned FLAN-T5. Can it cook?

I used Blueprint to train FLAN-T5 to generate a recipe for any dish I could dream up.

Build an avatar generator

In fewer than 200 lines of code, you'll use Blueprint to build a simple version of an avatar generation app like Lensa.

Fine-tuning with Dreambooth in Figma

See how to build a FigJam plugin to fine-tune and invoke Stable Diffusion with Dreambooth directly from Figma.

Ready to get started?

Start your project with 4 hours of free GPU credits for fine-tuning and model serving.